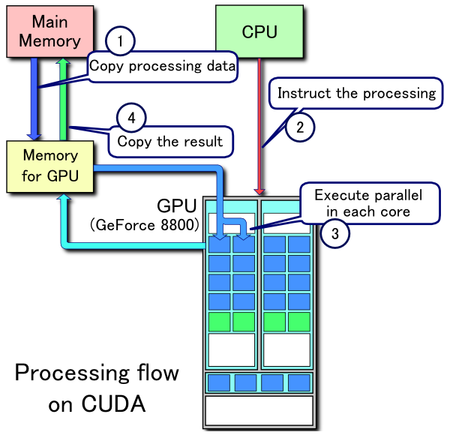

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

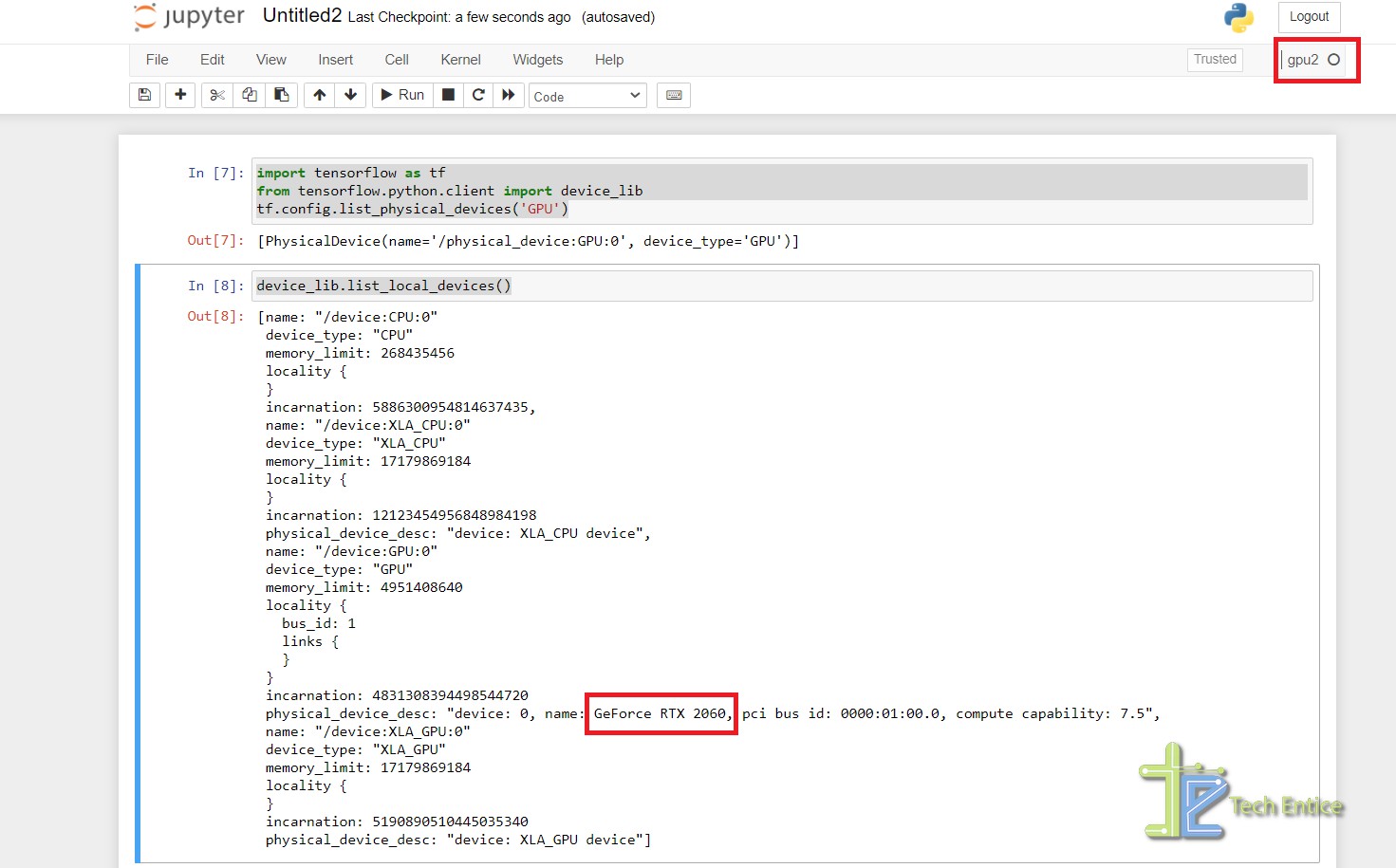

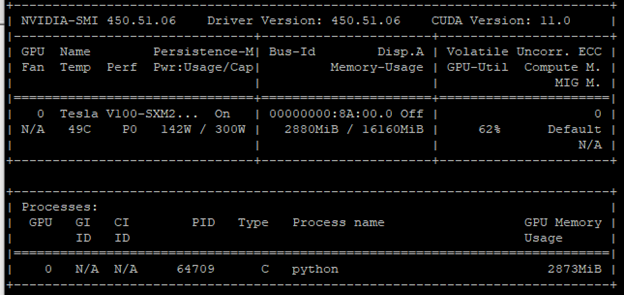

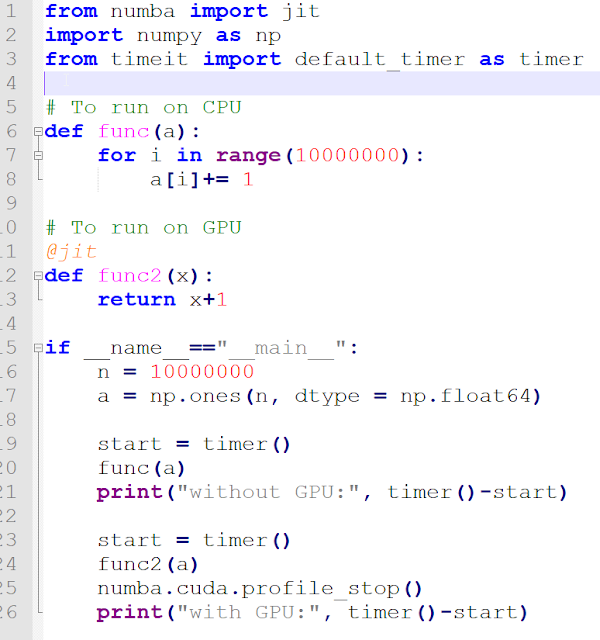

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

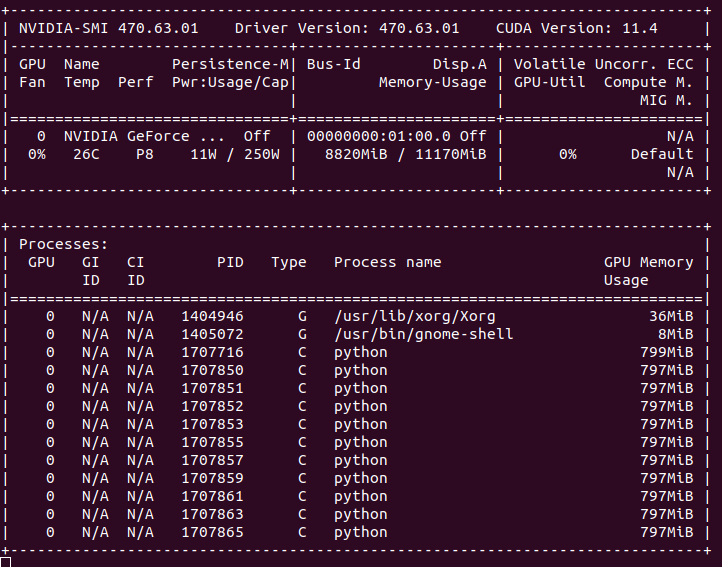

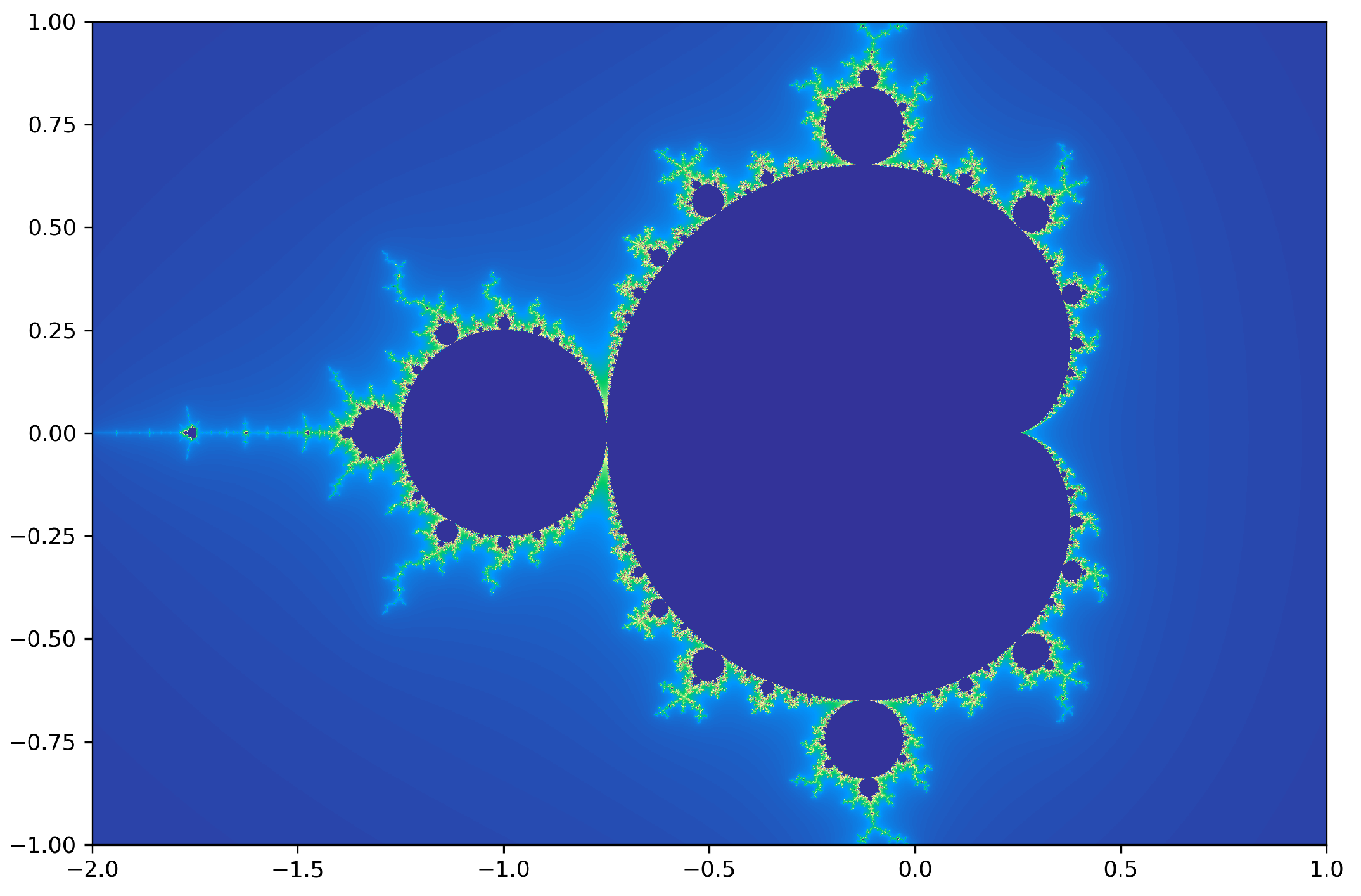

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

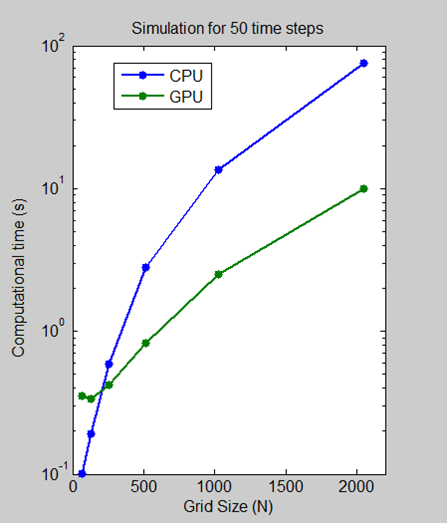

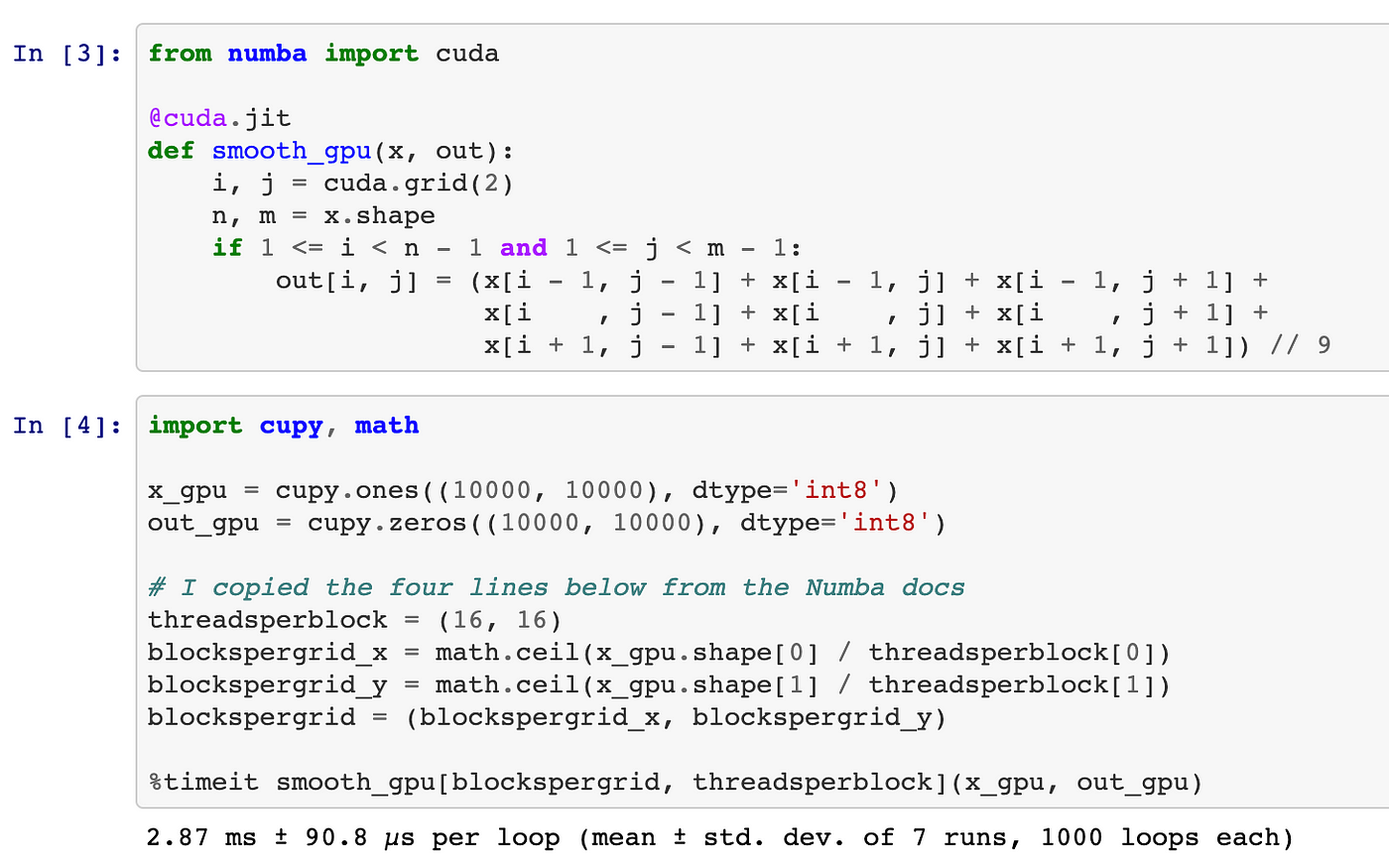

GPU Computing with Python: Performance, Energy Efficiency and Usability | Computation

Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems | by Avimanyu Bandyopadhyay | Amazon.es

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science